As organizations clamor for ways to maximize and leverage compute power, they might look to cloud-based offerings that chain together multiple resources to deliver on such needs. Chipmaker Nvidia, for example, is developing data processing units (DPUs) to tackle infrastructure chores for cloud-based supercomputers, which handle some of the most complicated workloads and simulations for medical breakthroughs and understanding the planet.

The concept of computer powerhouses is not new, but dedicating large groups of computer cores via the cloud to offer supercomputing capacity on a scalable basis is gaining momentum. Enterprises and startups are now exploring this option, which lets them use just the components they need when they need them.

For instance, Climavision, a startup that uses weather information and forecasting tools to understand the climate, needed access to supercomputing power to process the vast amount of data collected about the planet’s weather. The company somewhat ironically found its answer in the clouds.

Jon van Doore, CTO for Climavision, says modeling the data his company works with was traditionally done on Cray supercomputers, usually at datacenters. “The National Weather Service uses these massive monsters to crunch these calculations that we’re trying to pull off,” he says. Climavision uses large-scale fluid dynamics to model and simulate the entire planet every six or so hours. “It’s a tremendously compute-heavy task,” van Doore says.

Cloud-Native Cost Savings

Before public cloud with massive instances was available for such tasks, he says it was common to buy big computers and stick them in datacenters run by their owners. “That was hell,” van Doore says. “The resource outlay for something like this is in the millions, easily.”

The problem was that once such a datacenter was built, a company might outgrow that resource in short order. A cloud-native option can open up greater flexibility to scale. “What we’re doing is replacing the need for a supercomputer by using efficient cloud resources in a burst-demand state,” he says.

Climavision spins up the 6,000 computer cores it needs when creating forecasts every six hours, and then spins them down, van Doore says. “It costs us nothing when spun down.”
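The arithmetic behind that burst-demand model can be sketched roughly as follows. The 6,000 cores and the six-hour cadence come from van Doore; the length of each run and the price per core-hour are hypothetical placeholders, since neither figure is given here, and the function names are illustrative only.

```python
# Rough sketch of burst-demand billing versus leaving the same cores running.
# Only CORES and the six-hour cadence come from the article.
CORES = 6_000                # cores spun up per forecast (from the article)
RUNS_PER_DAY = 24 // 6       # one forecast every six hours (from the article)
RUN_HOURS = 1.0              # ASSUMPTION: hours each forecast run stays up
PRICE_PER_CORE_HOUR = 0.05   # ASSUMPTION: placeholder price in dollars


def daily_cost(cores, active_hours_per_day, price_per_core_hour):
    """Cost of keeping `cores` running for `active_hours_per_day` hours."""
    return cores * active_hours_per_day * price_per_core_hour


# Cores are billed only while a forecast is running.
burst = daily_cost(CORES, RUNS_PER_DAY * RUN_HOURS, PRICE_PER_CORE_HOUR)

# The same cores moved to the cloud but never spun down.
always_on = daily_cost(CORES, 24, PRICE_PER_CORE_HOUR)

print(f"burst: ${burst:,.0f}/day, always on: ${always_on:,.0f}/day")
print(f"burst is {burst / always_on:.0%} of the always-on bill")
```

Under these assumed inputs, the spun-down periods account for most of the difference; a longer run or a different price changes the totals but not the shape of the comparison.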

He calls this the promise of the cloud that few organizations truly realize, because organizations tend to move workloads to the cloud and then leave them running. That can end up costing companies almost as much as they were paying before.

'Not All Sunshine and Rainbows'

Van Doore anticipates Climavision might use 40,000 to 60,000 cores across multiple clouds in the future for its forecasts, which will eventually be produced on an hourly basis. “We’re pulling in terabytes of data from public observations,” he says. “We’ve got proprietary observations that are coming in as well. All of that goes into our massive simulation machine.”

Climavision uses cloud providers AWS and Microsoft Azure to secure the compute resources it needs. “What we’re trying to do is stitch together all these different smaller compute nodes into a larger compute platform,” van Doore says. The platform, backed by fast storage, offers some 50 teraflops of performance, he says. “It’s really about supplanting the need to buy a big supercomputer and hosting it in your backyard.”

Traditionally a workload such as Climavision’s would be pushed out to GPUs. The cloud, he says, is well-optimized for that because many companies are doing visual analytics. For now, the climate modeling is largely based on CPUs because of the precision needed, van Doore says.

There are tradeoffs to running a supercomputer platform via the cloud. “It’s not all sunshine and rainbows,” he says. “You’re essentially dealing with commodity hardware.” The delicate nature of Climavision’s workload means if a single node is unhealthy, does not connect to storage the right way, or does not get the right amount of throughput, the entire run must be trashed. “This is a game of precision,” van Doore says. “It’s not even a game of inches -- it’s a game of nanometers.”
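That all-or-nothing rule can be illustrated with a small sketch. It is not Climavision's tooling: the `NodeStatus` fields, the throughput threshold, and the node names are hypothetical, chosen only to mirror the three failure conditions van Doore describes.

```python
from dataclasses import dataclass

# ASSUMPTION: placeholder threshold; the article gives no throughput figure.
MIN_THROUGHPUT_GBPS = 10.0


@dataclass
class NodeStatus:
    """Hypothetical per-node report collected before or during a run."""
    name: str
    healthy: bool
    storage_connected: bool
    throughput_gbps: float


def node_ok(node, min_throughput=MIN_THROUGHPUT_GBPS):
    """A node passes only if all three conditions hold."""
    return (
        node.healthy
        and node.storage_connected
        and node.throughput_gbps >= min_throughput
    )


def failing_nodes(nodes):
    """Names of the nodes that would invalidate the run."""
    return [n.name for n in nodes if not node_ok(n)]


def run_is_valid(nodes):
    """All-or-nothing: one bad node means the whole run is discarded."""
    return bool(nodes) and all(node_ok(n) for n in nodes)


cluster = [
    NodeStatus("node-0", True, True, 12.5),
    NodeStatus("node-1", True, True, 11.0),
    NodeStatus("node-2", True, True, 4.2),  # healthy, but starved of throughput
]

print(run_is_valid(cluster))   # False
print(failing_nodes(cluster))  # ['node-2']
```

The point of the sketch is the `all(...)` check: there is no partial credit, so two good nodes out of three still produce an unusable run.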

Climavision cannot make use of on-demand instances in the cloud, he says, because the forecasts cannot be run if they are missing resources. All the nodes must be reserved to ensure their health, van Doore says.

Working in the cloud also means relying on service providers to deliver. As seen in recent months, wide-scale cloud outages can strike even providers such as AWS, pulling down some services for hours at a time before the issues are resolved.

Higher-density compute power, advances in GPUs, and other resources could advance Climavision’s efforts, van Doore says, and potentially bring down costs. Quantum computing, he says, would be ideal for running such workloads -- once the technology is ready. “That is a good decade or so away,” van Doore says.

Supercomputing and AI

The growth of AI and applications that use AI could depend on cloud-native supercomputers being even more readily available, says Gilad Shainer, senior vice president of networking for Nvidia. “Every company in the world will run supercomputing in the future because every company in the world will use AI.” That need for ubiquity in supercomputing environments will drive changes in infrastructure, he says.

“Today if you try to combine security and supercomputing, it does not really work,” Shainer says. “Supercomputing is all about performance and once you start bringing in other infrastructure services -- security services, isolation services, and so forth -- you are losing a lot of performance.”

Cloud environments, he says, are all about security, isolation, and supporting huge numbers of users, which can have a significant performance cost. “The cloud infrastructure can waste around 25% of the compute capacity in order to run infrastructure management,” Shainer says.

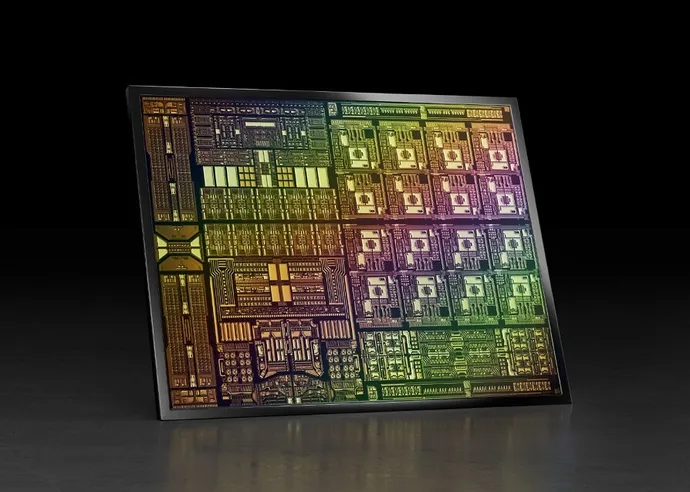

Nvidia has been looking to design a new architecture for supercomputing that combines performance with security needs, he says. This is done through the development of a new compute element dedicated to running the infrastructure workload, security, and isolation. “That new device is called a DPU -- a data processing unit,” Shainer says. BlueField is Nvidia’s DPU, and it is not alone in this arena. Broadcom’s DPU is called Stingray. Intel produces the IPU, or infrastructure processing unit.

Shainer says a DPU is a full datacenter on a chip that replaces the network interface card and also brings computing to the device. “It’s the ideal place to run security.” That leaves CPUs and GPUs fully dedicated to supercomputing applications.

It is no secret that Nvidia has been working heavily on AI lately and designing architecture to run new workloads, he says. For example, the Earth-2 supercomputer Nvidia is designing will create a digital twin of the planet to better understand climate change. “There are a lot of new applications utilizing AI that require a massive amount of computing power or requires supercomputing platforms and will be used for neural network languages, understanding speech,” says Shainer.

AI resources made available through the cloud could be applied in bioscience, chemistry, automotive, aerospace, and energy, he says. “Cloud-native supercomputing is one of the key elements behind those AI infrastructures.” Nvidia is working with its ecosystem, including OEMs and universities, to further the architecture, Shainer says.

Cloud-native supercomputing may ultimately offer something he says was missing for users in the past, who had to choose between high-performance capacity and security. “We’re enabling supercomputing to be available to the masses,” says Shainer.